Database Analytics in 2026: A Guide for Forward-Thinking Teams

Database analytics is a strategic business process that examines extensive collections of structured data stored in databases to uncover patterns, trends, and insights that inform profitable business decisions.

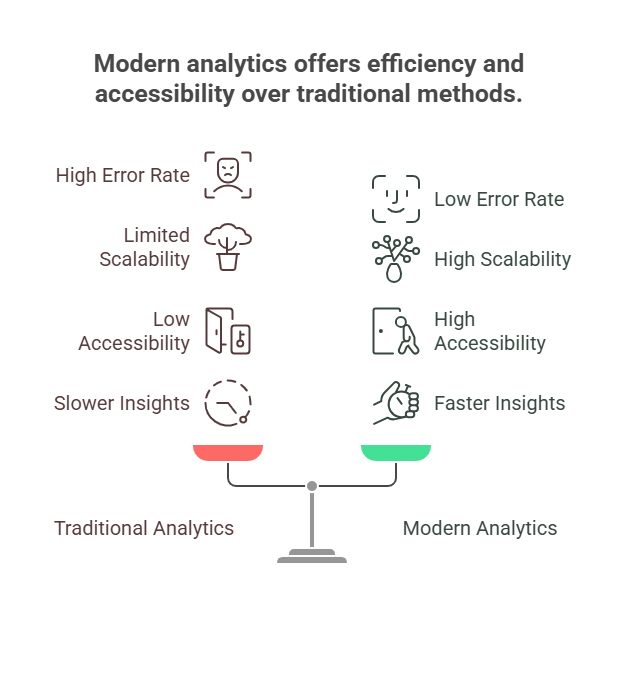

In the past, analysing databases often involved manual queries, time-consuming data processing, and exporting data across multiple systems. Modern analytics tools incorporate technologies like artificial intelligence (AI) and machine learning (ML), enabling teams to analyse data and generate insights directly within the database, automate processes, and cut operational costs.

According to McKinsey Global Institute, data-driven organisations are 23x more likely to gain new customers and 19x more likely to be profitable. In this guide, we’ll explore the evolution of database analytics, the role of AI in modern analytics, and how you can leverage it in your business in 2026.

The Current State of Database Analytics

According to IDC, the average data professional uses between three and seven tools, including spreadsheets, to manage data. Moving between that many tools takes a significant amount of time and is prone to errors.

The traditional method of analysing data requires considerable manual effort and technical expertise. The user must write an accurate SQL query before any analysis can begin, which creates several challenges for teams:

1. Human error: Manually inputting data and querying databases can be tedious. It often leads to omissions and errors, affecting the overall analysis.

2. Scalability issues: Manual analysis is tied to the capacity of the data professional, and that capacity is limited. When data volume grows, manual processes struggle to scale.

3. Lower accessibility for non-technical users: Traditional analytics requires niche expertise. This restricts access to data analysts, engineers, and scientists within the team. Non-technical users are forced to wait until the “experts” prepare a presentation.

4. Slower time to insights: Manual SQL queries take time, and data analysts are often said to spend as much as 80% of their time cleaning data. By the time the entire process is completed, the market may have moved, and the insights become less valuable.

Common Databases and What They Are Used For

Various types of databases serve different business needs. Let's look at some of them:

SQL Databases (Relational databases)

SQL stands for Structured Query Language, a standard language used to manage and query relational databases. SQL databases organise data into tables made up of rows (records) and columns (attributes), making them ideal for structured data and highly relational use cases.

They remain the backbone of many business systems today, especially in customer relationship management (CRM), content management systems (CMS), financial systems, and enterprise resource planning (ERP). Popular examples include MySQL, PostgreSQL, and Oracle.

MySQL has consistently been one of the most widely adopted databases over the past two decades. Its popularity stems from several advantages: its Community Edition is free and open-source, and it is relatively easy to learn, highly reliable, and versatile enough to support a wide range of business applications.
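To make the table, row, and column model concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their contents are hypothetical, and SQLite stands in for any relational database; MySQL or PostgreSQL would need their own driver but the SQL would look much the same.

```python
import sqlite3

# In-memory database so the example leaves no files behind.
conn = sqlite3.connect(":memory:")

# Hypothetical schema: columns are attributes, each row is one record.
conn.executescript("""
    CREATE TABLE customers (
        id   INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount      REAL NOT NULL
    );
""")

conn.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace")],
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(1, 120.0), (1, 80.0), (2, 50.0)],
)

# The join follows the relationship between the two tables,
# and GROUP BY produces one summary row per customer.
totals = conn.execute("""
    SELECT c.name, SUM(o.amount) AS total_spent
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY total_spent DESC
""").fetchall()

print(totals)
```

The join is what makes the model "relational": the `customer_id` column in `orders` points back to a row in `customers`, so one query can combine both tables.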

NoSQL Databases (Not Only SQL)

NoSQL or “not only SQL” databases don’t follow the strict relational structure of SQL databases. They are more flexible and can handle a variety of data structures, which is why they’re used for semi-structured and unstructured data such as text documents, videos, and audio files. NoSQL databases are commonly applied in web applications, the Internet of Things (IoT), and mobile applications. Examples are MongoDB and Apache Cassandra.

Time-series Databases (TSDB)

Time-series databases store and query data with timestamps. They feature specialised query functions for time-based analysis, data retention policies, and downsampling (aggregating data over time).

TSDBs are commonly used in monitoring systems, IoT data, financial data, industrial automation, weather data, and energy management. Examples include InfluxDB and TimescaleDB.
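Downsampling, mentioned above, can be illustrated in a few lines of plain Python. This is only a sketch of the idea, assuming hypothetical temperature readings; a real TSDB such as InfluxDB or TimescaleDB would do this with its own query functions rather than application code.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

# Hypothetical sensor readings: (ISO timestamp, temperature in Celsius).
readings = [
    ("2026-01-01T09:05:00", 20.0),
    ("2026-01-01T09:35:00", 22.0),
    ("2026-01-01T10:10:00", 25.0),
    ("2026-01-01T10:50:00", 27.0),
    ("2026-01-01T11:20:00", 30.0),
]


def downsample_hourly(points):
    """Average all readings that fall within the same clock hour."""
    buckets = defaultdict(list)
    for timestamp, value in points:
        # Truncate the timestamp to the start of its hour to get the bucket key.
        hour = datetime.fromisoformat(timestamp).replace(
            minute=0, second=0, microsecond=0
        )
        buckets[hour].append(value)
    return {hour: mean(values) for hour, values in sorted(buckets.items())}


hourly = downsample_hourly(readings)
for hour, average in hourly.items():
    print(hour.isoformat(), average)
```

Five raw points become three hourly averages, which is the trade-off downsampling makes: less storage and faster queries in exchange for lower resolution.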

Graph Databases

Graph databases are efficient for querying and analysing relationships. Following graph theory, they store data primarily as nodes and edges: nodes represent entities, and edges represent the relationships between them. Graph databases use graph-specific query languages, such as Cypher or Gremlin.

They are common in social networks, recommendation engines, and supply chain management. Examples are Neo4j and Amazon Neptune.
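The node-and-edge model can be sketched with an ordinary Python dictionary. The people and "follows" relationships below are hypothetical, and the traversal is a plain-Python stand-in for what a graph database would express in Cypher or Gremlin.

```python
# Hypothetical social graph: nodes are people, edges are "follows" relationships.
edges = [
    ("alice", "bob"),
    ("alice", "carol"),
    ("bob", "dave"),
    ("carol", "dave"),
    ("carol", "erin"),
]

# Adjacency list: each node maps to the set of nodes it points to.
graph = {}
for source, target in edges:
    graph.setdefault(source, set()).add(target)
    graph.setdefault(target, set())


def friends_of_friends(graph, person):
    """Return nodes reachable in two hops that are not already direct neighbours."""
    direct = graph.get(person, set())
    two_hops = set()
    for friend in direct:
        two_hops |= graph.get(friend, set())
    return two_hops - direct - {person}


suggestions = sorted(friends_of_friends(graph, "alice"))
print(suggestions)
```

This "friends of friends" lookup is the kind of relationship query behind a simple recommendation feature; in a relational database it would require a self-join for every extra hop.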

Vector Databases

These databases store, index, and search high-dimensional vector embeddings. These embeddings are numerical representations of data, such as text and images. They are often used in recommendation systems, image and video retrieval, and anomaly detection. Examples include Pinecone and Weaviate.
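As a rough illustration of what "searching embeddings" means, the sketch below ranks items by cosine similarity to a query vector. The three-dimensional vectors and product names are made up for readability, and the brute-force scan is an assumption for clarity; products such as Pinecone and Weaviate rely on specialised indexes rather than comparing against every stored vector.

```python
import math

# Hypothetical 3-dimensional embeddings; real models produce hundreds
# or thousands of dimensions.
embeddings = {
    "running shoes": [0.9, 0.1, 0.0],
    "trail sneakers": [0.8, 0.2, 0.1],
    "coffee maker": [0.0, 0.1, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query_vector, items, k=2):
    """Return the names of the k items most similar to the query vector."""
    scored = [
        (cosine_similarity(query_vector, vector), name)
        for name, vector in items.items()
    ]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


results = nearest([1.0, 0.0, 0.0], embeddings)
print(results)
```

Items whose vectors point in a similar direction to the query rank first, which is why semantically related products surface together even when their names share no words.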

The Shift Toward Automation

Database analytics has developed significantly since the 1950s, when the concept of automating data processing first emerged. A quick summary of the journey of database analytics looks like this:

- 1970s: Business Intelligence (BI) emerged.

- 1990s: Data warehousing and online analytical processing (OLAP) became established in data analytics.

- 2000s: Big data technologies and advanced analytics techniques emerged.

- 2010s: Data analytics became more accessible to organisations of all sizes because of advancements in cloud computing and open-source technologies.

Today, analytics is infused with artificial intelligence and machine learning technologies.

Rise of Self-serve Analytics Platforms and Automated Data Pipelines

Before low-code analytics tools gained popularity, data analysis was primarily the domain of IT professionals with coding expertise. To make data more accessible, early tools like Microsoft Excel introduced basic self-service capabilities, enabling individuals to perform simple analyses without relying entirely on technical teams.

In the early 2000s, self-serve analytics truly gained momentum with the emergence of visualisation platforms like Qlik and Tableau. These tools empowered business users across departments to access insights, build dashboards, and make data-driven decisions without writing SQL.

As the need for real-time decision-making grew, automated data pipelines emerged. These pipelines streamlined the flow of data from extraction to transformation and loading (ETL) with minimal manual effort. This automation laid the groundwork for faster, more responsive analytics.
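The extract, transform, and load stages can be sketched with the Python standard library alone. The CSV content, column names, and cleaning rules below are hypothetical; a production pipeline would read from real sources and usually run under a scheduler.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file or an API.
RAW_CSV = """order_id,region,amount
1, north ,100.50
2,SOUTH,
3,north,20
"""


def extract(text):
    """Read raw rows from CSV text."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Normalise region names and drop rows with a missing amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["region"].strip().lower(), float(amount))
        )
    return cleaned


def load(rows, conn):
    """Write cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

loaded = conn.execute(
    "SELECT order_id, region, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage as its own function makes it straightforward to test the cleaning rules in isolation and to swap the source or destination later.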

Today, platforms like BlazeSQL build on this foundation by combining automated pipelines with natural language querying. They enable businesses to ask their databases complex questions in plain English and instantly access insights powered by up-to-date data. Leveraging real-time pipelines ensures decisions are made with current, reliable information, bridging the gap between business curiosity and technical execution.

The In-Database Architectural Shift

Traditionally, data had to be exported from databases to BI or analytics tools for processing, a step that introduced delays and increased complexity.

However, the in-database architecture changed that.

In-database analytics refers to the ability to perform data analysis directly within the database system, without extracting or moving data to external analytics platforms. This architectural shift significantly reduces data latency, enhances system performance, and minimises security risks associated with data duplication or transfer.

This means operations such as filtering, aggregating, predictive modelling, and even machine learning can now be executed where the data lives. Advancements in database engines, together with built-in analytics functions in platforms like Snowflake, PostgreSQL, and BigQuery, made this possible.
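The difference between exporting data and analysing it in place can be shown with a small comparison. This is a sketch on a hypothetical `sales` table in SQLite; the same principle applies to larger engines, where the gap in data moved is far greater.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [
        ("north", 100.0),
        ("north", 50.0),
        ("south", 70.0),
        ("south", 30.0),
        ("south", 20.0),
    ],
)

# Export-style approach: pull every row out, then aggregate in application code.
exported = conn.execute("SELECT region, amount FROM sales").fetchall()
totals_outside = {}
for region, amount in exported:
    totals_outside[region] = totals_outside.get(region, 0.0) + amount

# In-database approach: the engine aggregates, and only the summary leaves.
totals_inside = dict(
    conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region").fetchall()
)

rows_moved_export = len(exported)
rows_moved_in_db = len(totals_inside)
print(totals_inside, rows_moved_export, rows_moved_in_db)
```

Both approaches give the same totals, but the in-database query returns one row per region instead of one row per sale, which is where the latency and security benefits come from.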

For businesses, the benefits are immediate and strategic. Decisions can be made on the most current data, enabling a shift from reactive to proactive operations. Tools like BlazeSQL take full advantage of this by allowing users to query live data in plain English, without waiting for exports or reports. This reduces the gap between data availability and action, making analytics more responsive to real-world dynamics.

As more organisations adopt real-time and event-driven architectures, in-database analytics is becoming critical for everything from fraud detection and customer personalisation to inventory management and operational forecasting.

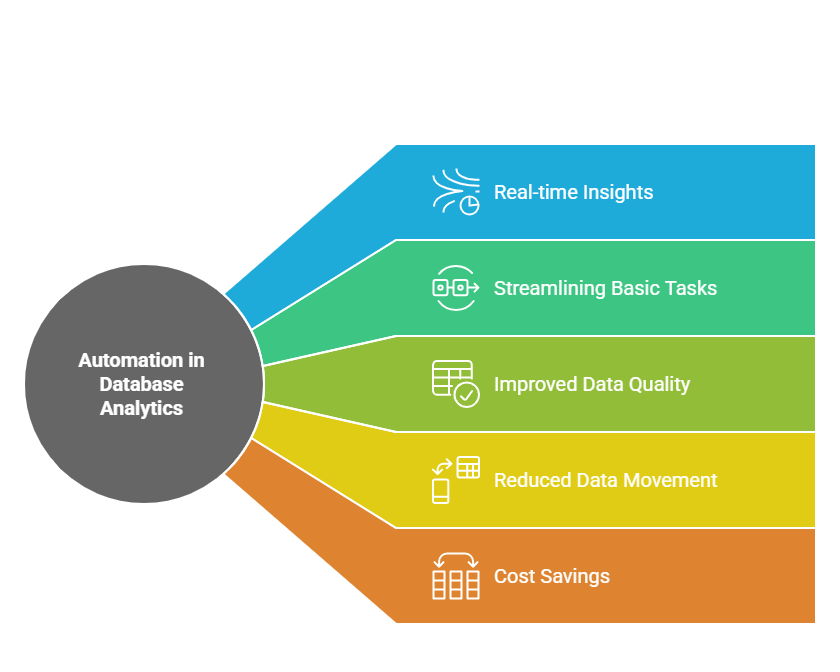

Benefits of Automation in Database Analytics

1. Real-time insights: Automation enables businesses to analyse data as soon as it is collected. Data moves through the pipeline without waiting on manual steps, so insights arrive sooner.

2. Streamlining basic tasks: Automation handles repetitive tasks, improving operational efficiency.

3. Improved data quality: Automation reduces the need for manual tasks. This means reduced human error and more reliable data for analysis.

4. Reduced data movement: Data teams no longer need to export large datasets to different tools. Automation can process and analyse data directly within the database, speeding up workflows.

5. Cost savings: Automation reduces manual labour and reliance on specialised skills. This lowers operational costs and optimises resource usage.

Enablers of Automation in Database Analytics

Some enablers of automation in analytics include:

1. Cloud platforms: Cloud providers such as Microsoft Azure, Amazon Web Services (AWS), and Google Cloud Platform (GCP) offer the on-demand scalability and processing power required for modern data workloads. They support elastic compute resources, managed storage, and integrated services like data warehouses (e.g., Azure Synapse, BigQuery, Redshift) that are optimised for automated, large-scale analytics. Cloud-native features like serverless computing and event-driven architectures further support real-time automation.

2. ETL/ELT automation tools: Modern ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) tools, such as Apache Airflow, dbt, Fivetran, and Talend, enable organisations to build and schedule data pipelines with minimal coding. These tools automatically handle job orchestration, dependency management, error logging, and retries, making pipelines more reliable and maintainable. ELT, in particular, takes advantage of cloud data warehouse compute for transformations, speeding up workflows and improving efficiency.

3. Metadata management systems: Metadata systems like Apache Atlas, Alation, and Informatica enable better data governance by cataloguing datasets, tracking data lineage, and providing semantic context. With well-managed metadata, automation tools can accurately interpret data structure, enforce policies, and apply transformations consistently. This helps with data discovery, impact analysis, and auditability in compliance-driven environments.

4. APIs and Integration Frameworks: APIs and integration tools such as RESTful services, webhooks, and middleware platforms (like Zapier or Apache NiFi) allow systems to communicate seamlessly. These enable real-time data triggers, automated data ingestion, and interoperability between applications, which are critical for end-to-end automation.

5. AI and Machine Learning: AI-driven analytics platforms integrate machine learning models to automate anomaly detection, forecasting, and data cleaning. These tools reduce the time analysts spend on repetitive tasks and surface insights proactively, enhancing decision-making speed and accuracy.
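The orchestration, dependency management, and retry behaviour described for ETL/ELT tools in the list above can be sketched in plain Python. This does not use the API of Apache Airflow, dbt, or any other named tool; the task names and the simulated failure are hypothetical, and `graphlib` (Python 3.9+) is used only to order the tasks.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}

attempts = {}


def run_task(name):
    """Stand-in for real work; 'transform' fails once to exercise the retry path."""
    attempts[name] = attempts.get(name, 0) + 1
    if name == "transform" and attempts[name] == 1:
        raise RuntimeError("simulated transient failure")


def run_with_retries(name, max_attempts=3):
    """Run a task, retrying on failure up to max_attempts times."""
    for attempt in range(1, max_attempts + 1):
        try:
            run_task(name)
            return
        except RuntimeError as error:
            print(f"{name}: attempt {attempt} failed ({error})")
    raise RuntimeError(f"{name} failed after {max_attempts} attempts")


# static_order() yields tasks so that every dependency runs before its dependants.
execution_order = list(TopologicalSorter(dependencies).static_order())
for task in execution_order:
    run_with_retries(task)

print(execution_order, attempts)
```

Declaring dependencies as data rather than hard-coding the call order is the core idea: the runner works out a valid sequence, and a transient failure in one step is retried without restarting the whole pipeline.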

AI in Database Analytics: How Has the Game Changed?

AI is no longer a standalone domain; it is increasingly embedded within various technologies and sectors. In database analytics, AI is shifting the focus from telling businesses what happened to predicting what will happen. This has increased the strategic relevance of analytics for businesses.

According to a survey, 80% of retail executives planned to use automated intelligent systems by 2025 and expected annual revenue growth of 10% or more.

Here are several ways AI is changing the game:

1. Natural language querying: The traditional way of asking a database for insights, or querying, involves writing complex SQL queries. The problem here is that not everyone knows how to write SQL code.

AI, through Natural Language Processing (NLP), enables users to query a database using plain English. An AI-powered analytics platform like BlazeSQL will interpret the natural language input, translate it into an accurate database query, and return relevant results to the user.

2. Predictive analytics: Traditional analytics mostly describes what has already happened. With AI and Machine Learning (ML) algorithms in modern analytics workflows, businesses can also get insights into what is likely to happen next.

These algorithms' predictive capacity helps businesses analyse historical data to identify patterns, predict potential risks, build models that forecast future trends, and even recommend optimal actions. It moves analytics from describing what happened to anticipating what will happen.

3. Auto-insights and Anomaly detection: Database analytics heavily relied on human input before the rise of AI and automation. With the introduction of AI, algorithms can automatically detect correlations and statistically relevant patterns in data. They can also automatically flag deviations from expected behaviour or spot emerging trends.
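Two of the ideas in the list above, forecasting from historical data and flagging deviations from expected behaviour, can be illustrated with basic statistics. This is a deliberately simple sketch, not the models that AI-powered platforms actually use: the figures are hypothetical, the forecast is a straight-line fit, and the anomaly check is a z-score with an assumed threshold of 2.

```python
from statistics import mean, stdev

# Hypothetical figures for illustration only.
monthly_revenue = [10, 12, 14, 16]
daily_orders = [100, 102, 98, 101, 99, 180, 100, 103]


def linear_forecast(values):
    """Fit a least-squares line through the points and extrapolate one step ahead."""
    n = len(values)
    xs = range(n)
    x_mean = mean(xs)
    y_mean = mean(values)
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, values)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    intercept = y_mean - slope * x_mean
    return intercept + slope * n


def flag_anomalies(values, threshold=2.0):
    """Return the indexes of values whose z-score magnitude exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


forecast = linear_forecast(monthly_revenue)
anomalies = flag_anomalies(daily_orders)
print(forecast, anomalies)
```

The revenue series rises by 2 each month, so the fitted line predicts 18 for the next month, and the spike of 180 orders at index 5 is the only value flagged. Real systems account for seasonality, noise, and many variables at once, but the underlying questions are the same.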

Emerging technologies

Here’s how AI-powered analytics tools are being used to streamline workflows and enhance the efficiency of data teams:

1. AI copilots for data teams: AI-powered copilots are emerging as the go-to intelligent assistants for data professionals. Tools like BlazeSQL and Microsoft Copilot for Power BI exemplify this trend, helping users generate and optimise SQL queries with ease. These copilots understand the context of what a user is trying to achieve and suggest the optimal paths to get the desired results.

2. Machine Learning-driven query optimisation: Analytics tools now embed ML models that analyse historical query patterns, data characteristics, and system resource utilisation to recommend query optimisations and resource allocation.

3. Generative AI for creating visualisations and narratives: Generative AI utilises complex machine learning models to generate realistic text, images, audio, video, 3D models, and more. Analytics tools like BlazeSQL make use of generative AI to suggest the most relevant visualisations for business queries and generate narratives in plain English, helping businesses understand the insights gained from the data.

Strategic implications for businesses

From retail and healthcare to finance and logistics, database analytics is transforming how organisations operate. With the rise of AI and automation, businesses across industries can now harness data at scale, not just for reporting, but for real-time decision-making, personalisation, forecasting, and innovation.

Here are some of the key strategic implications for modern businesses:

1. Democratisation of data access and insights: Non-technical users can now interact directly with data and access insights without requiring technical skills. With tools like BlazeSQL, anyone can query databases and gain insights using plain English.

2. A shift in skill requirements: AI and automation have made generating insights much faster and easier. Companies will increasingly need people who are skilled at interpreting insights and thinking strategically.

3. Governance and ethical considerations: Implementing AI in database analytics means businesses must adopt stricter governance and encryption techniques and comply with regulatory frameworks.

4. Focus on data literacy: Self-service and AI-powered analytics allow a much wider audience to access data directly. Businesses must now invest in data literacy programs for non-technical employees to fully maximise the benefits of these tools.

5. Faster innovation cycle: Automation speeds up analytics processes. Businesses can experiment more, explore different analytical techniques, and try out various business models more quickly. All of this allows businesses to innovate faster.

Future Outlook: Where is Database Analytics Headed?

The future of database analytics looks promising, with many emerging technologies transforming the way businesses interact and gain insights from data.

According to Gartner, the adoption of AI and ML in analytics was expected to grow by 40% year on year in 2025. These technologies will expand the scope of predictive analytics and natural language processing (NLP) applications.

Database analytics will see a rise in hybrid cloud solutions as the need for flexible analytics increases. These cloud solutions enable businesses to access multiple cloud services, improving security, efficiency, and scalability. According to Forbes, data storage and computing will become more responsive to business needs as hybrid and multi-cloud strategies gain traction.

Tools that democratise data analysis and make it more accessible will continue to evolve, empowering non-technical users as the need for data literacy grows. Digit reports that data analysis is moving from the domain of the few to ubiquity.

Edge computing is already solving problems for industries such as autonomous vehicles and industrial automation, which require real-time analytics. Instead of sending data to a central cloud, it processes information closer to the source, reducing latency and enabling faster decision-making. As more industries demand instant insights, edge computing will continue to gain traction. Gartner has predicted that, by 2025, around 75% of enterprise-generated data would be created and processed outside a traditional centralised data centre or cloud.

Fully Automated & AI-powered Database Analytics with BlazeSQL

Imagine having a highly skilled data analyst on your team who can generate complex SQL queries in seconds and works 24/7. That’s BlazeSQL: an AI-powered analytics tool that connects directly to over 13 databases to deliver insights on demand.

With BlazeSQL, non-technical teams can enjoy instant self-service analytics, lightning-fast query generation, personalised dashboards, proactive intelligence, and enterprise-grade security.

BlazeSQL prioritises data protection and compliance. It never stores your data, adheres to strict encryption standards, and integrates seamlessly with role-based access controls, ensuring your sensitive business data stays safe and governed.

For users who prefer local environments, BlazeSQL offers a powerful desktop version. This allows secure, offline analysis directly from your machine without compromising speed or capability.

Want to see BlazeSQL in action?

Frequently asked questions

What is database analytics? Database analytics is the process of analysing structured data stored in databases to uncover patterns, trends, and actionable insights that inform strategic business decisions. It often involves querying, aggregating, and visualising data to answer specific questions or track key metrics.

Why are SQL databases still widely used for analytics? SQL databases remain popular because they are reliable, scalable, and optimised for structured data. SQL is a robust and standardised language for data querying, and most BI and analytics tools are built to work seamlessly with SQL databases, making them the backbone of many modern analytics stacks.

Is in-database analytics better than exporting data to external tools? In many cases, yes. In-database analytics minimises data movement, enhances performance, and improves security by allowing analysis to happen where the data lives. It also supports real-time decision-making and simplifies architecture, especially when paired with tools like BlazeSQL that operate natively within the database layer.

What role does AI play in modern database analytics? AI enhances database analytics by automating tasks such as query generation, anomaly detection, forecasting, and insight delivery. AI tools like BlazeSQL allow users to interact with data using natural language, democratising access to analytics and speeding up decision-making with no coding or technical expertise required.

What are the best tools for automating database analytics? Top tools include BlazeSQL for AI-powered querying and dashboard creation, dbt for transformation logic, Fivetran or Stitch for data integration, and platforms like Snowflake or BigQuery for scalable, cloud-native analysis. The best stack often depends on your data volume, team structure, and business needs.

What industries benefit most from database analytics? Virtually all industries benefit, including retail (customer behaviour analysis), finance (fraud detection, portfolio monitoring), healthcare (patient trend analysis), manufacturing (supply chain optimisation), and SaaS (user engagement and churn analysis). AI-powered analytics speeds up impact across all these sectors.

Additional Reading

- What Is Data and Analytics? - by Gartner

- Top 5 SQL Tools for Data Analysis - by BlazeSQL

- 10 Eye-Opening Data Analytics Statistics for 2025 - by Edge Delta