3 LLM-powered SQL Agents for BI & Data Analytics

The rise of large language models (LLMs) is reshaping how we work across many domains, from writing code to automating customer support. In the data space, one of the most impactful applications is the LLM SQL agent.

An LLM SQL agent is a tool that converts natural language questions into SQL queries, enabling faster and easier data access. It acts as an intelligent assistant that understands business questions phrased in everyday language and generates accurate SQL to run against a company's database. These agents combine language understanding, knowledge of data schemas, and contextual awareness to deliver precise, executable queries.

This article is for data teams, AI product managers, and BI leaders looking to streamline analytics workflows, reduce dependence on manual SQL writing, and explore the next wave of AI-powered business intelligence. We’ll explain how LLM SQL agents work, highlight real-world examples, and share steps to get started.

3 LLM-powered SQL Agents

The demand for real-time insights and self-serve analytics has made LLM-powered SQL agents essential tools in a modern data stack. These agents help both technical and non-technical users query data using natural language. Let's explore three standout tools in the space.

1. BlazeSQL

BlazeSQL is an LLM SQL agent built for teams that want to turn questions into insights in seconds. It simplifies how organisations interact with data, generating accurate SQL queries from plain English. Designed for speed and usability, BlazeSQL empowers technical and non-technical users to ask data questions in everyday language and receive ready-to-use SQL instantly. Beyond query generation, BlazeSQL also acts as a personal data analyst, helping users visualise results, create dashboards, and explore trends without writing a single line of code.

What LLM is BlazeSQL powered by?

BlazeSQL uses state-of-the-art LLMs, including OpenAI's GPT-4 and other advanced models, depending on the specific use case. This multi-model approach provides flexibility and resilience and helps maintain high accuracy across varied business questions and data environments.

What sets BlazeSQL apart?

BlazeSQL sets itself apart in these fundamental areas:

1. Precision-first architecture: BlazeSQL is engineered for accuracy, not just plausibility. Its models are fine-tuned to understand user intent, business logic, and data structure, significantly reducing syntax errors and producing high-quality, reliable queries that data teams can trust.

2. Plug-and-play setup: BlazeSQL connects to your SQL database in seconds. Its plug-and-play integration makes onboarding seamless for anyone, allowing enterprises to unlock value without complexity or long setup cycles.

3. Schema-aware reasoning: By integrating with your database metadata, BlazeSQL gains a deep understanding of table relationships, constraints, and data types. This allows it to generate context-aware queries that align with your actual schema, reducing the back-and-forth of query correction.

4. Full BI capability: BlazeSQL isn’t just a text-to-SQL tool; it’s a powerful, no-code business intelligence platform. Users can visualise results, build interactive dashboards, and share insights across the organisation. This makes it ideal for cross-functional teams looking to explore data collaboratively without needing technical expertise.

5. Enterprise-grade security and governance: BlazeSQL supports role-based access, data masking, and audit logging to ensure that sensitive information is only accessible to the right users. It’s built to meet enterprise compliance standards while maintaining ease of use.
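Role-based access, data masking, and audit logging are standard techniques, and the sketch below shows in general terms how they can fit together. It is not BlazeSQL's implementation: the role names, the `POLICIES` table, and the `apply_policy` and `mask_value` helpers are hypothetical. In practice, permissions are usually also enforced in the database itself, not only in application code.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RolePolicy:
    """Hypothetical policy: tables a role may query and columns it sees masked."""
    allowed_tables: set[str]
    masked_columns: set[str] = field(default_factory=set)


POLICIES = {
    "analyst": RolePolicy({"customers", "transactions", "regions"}, {"email"}),
    "admin": RolePolicy({"customers", "transactions", "regions"}),
}
AUDIT_LOG: list[dict] = []


def mask_value(value: object) -> str:
    """Hide all but the last two characters; short values are hidden entirely."""
    text = str(value)
    if len(text) <= 4:
        return "*" * len(text)
    return "*" * (len(text) - 2) + text[-2:]


def apply_policy(user: str, role: str, tables: set[str], rows: list[dict]) -> list[dict]:
    """Check table access, record an audit entry, and mask sensitive columns."""
    policy = POLICIES[role]  # an unknown role raises KeyError, so access is denied
    denied = tables - policy.allowed_tables
    AUDIT_LOG.append({"user": user, "role": role, "tables": sorted(tables), "allowed": not denied})
    if denied:
        raise PermissionError(f"role {role!r} may not read {sorted(denied)}")
    return [
        {col: mask_value(val) if col in policy.masked_columns else val for col, val in row.items()}
        for row in rows
    ]


rows = [{"name": "Ana", "email": "ana@example.com"}]
print(apply_policy("u1", "analyst", {"customers"}, rows))
```

An analyst sees the email column masked, an admin sees it in full, and every request (allowed or not) leaves an audit entry.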

Benefits for teams

- Enables self-service analytics for non-technical users across departments

- Reduces bottlenecks by minimising dependency on data engineers or analysts

- Accelerates decision-making with faster access to relevant insights

- Promotes data literacy by making data exploration intuitive and visual

- Ensures data governance with granular access controls and activity tracking

Use cases

- Create real-time SQL queries, visualisations, and dashboards by asking business questions in plain English

- Ask Blaze to explain the insights on the dashboard

- Automate recurring reports and track KPIs without manual effort

- Act as a centralised analytics layer between siloed teams and complex data environments

- Empower product, marketing, and ops teams to run ad hoc analysis independently

Features at a glance

- Text-to-SQL code generation using schema-aware reasoning

- Real-time visualisation and dashboard creation

- Automated report generation

- Enterprise-grade security and governance

- Slack integration

- Embeddable in internal tools

Common challenges it overcomes

- Handling complex SQL queries: Automatically generates advanced SQL, including joins, subqueries, and aggregations, from plain English prompts, saving time and reducing errors.

- Data schema ambiguity: Uses live metadata from your database to understand table relationships, naming conventions, and constraints, producing schema-aware queries.

- Empowering non-technical users: Allows business users to access data and generate insights independently, without relying on data analysts or writing SQL.

- Boosting collaboration in siloed teams: Acts as a shared analytics layer, enabling cross-functional teams to access and share insights through dashboards and visualisations.

- Generating accurate SQL commands: Prioritises accuracy over plausibility by interpreting user intent and aligning queries with business context and schema.

- Ensuring data security and access control: Offers role-based access, audit trails, and permission settings to ensure secure data usage across departments.

- Overcoming training data limitations: Relies on live database integration rather than static model training, enabling up-to-date and context-relevant SQL generation.

If you are looking for a faster and simpler way to query data, book a free demo or start your trial today with BlazeSQL.

2. Metabase

Metabase is an open-source BI tool that enables teams to explore and visualise data. Known for its ease of use and flexibility, Metabase has been widely adopted by startups and mid-sized companies looking for an alternative to Tableau.

Which LLM powers Metabase?

Metabase leverages OpenAI’s language models, which possess strong contextual understanding, to convert plain English inputs into SQL queries.

Benefits:

- Users can ask questions about their data without SQL knowledge

- Reduced reliance on data teams for data retrieval

- Provides more people within the organisation with direct access and insights into data

Use Cases:

- Using natural language descriptions to fetch relevant data

- Automating standard business reports

- Integrating with SQL databases to enable basic data exploration.

- Supporting marketing or ops teams with quick access to filtered metrics.

Features:

- Text-to-SQL generation

- Dashboarding

- Reporting and sharing

- Basic schema awareness

3. Vanna

Vanna is a lightweight, open-source LLM SQL agent that translates plain English into executable SQL queries. It is a developer-first tool that can be self-hosted, integrated with custom user interfaces (UIs), and extended to fit various data stack architectures.

Which LLM powers Vanna?

Vanna is designed to be LLM-agnostic and is commonly paired with OpenAI's GPT-4. It can also integrate with other LLMs such as Anthropic's Claude, Meta's Llama models, or self-hosted LLMs via an API.
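Being LLM-agnostic usually means the agent talks to the model through a narrow interface, so backends can be swapped without touching the rest of the pipeline. The sketch below illustrates that design under stated assumptions: `LLMBackend`, `SQLAgent`, and `EchoBackend` are hypothetical names, not Vanna's actual classes, and `EchoBackend` returns canned SQL so the example runs offline.

```python
from __future__ import annotations

from typing import Protocol


class LLMBackend(Protocol):
    """Hypothetical interface: any object that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


class EchoBackend:
    """Offline stand-in; a real backend would call a hosted or self-hosted model."""

    def __init__(self, canned_sql: str) -> None:
        self.canned_sql = canned_sql
        self.prompts: list[str] = []  # recorded so the prompt can be inspected

    def complete(self, prompt: str) -> str:
        self.prompts.append(prompt)
        return self.canned_sql


class SQLAgent:
    """Builds a prompt from schema and question, then delegates to its backend."""

    def __init__(self, backend: LLMBackend, schema_ddl: str) -> None:
        self.backend = backend
        self.schema_ddl = schema_ddl

    def generate_sql(self, question: str) -> str:
        prompt = (
            "Translate the question into a single SQL query.\n"
            f"Schema:\n{self.schema_ddl}\n"
            f"Question: {question}\nSQL:"
        )
        return self.backend.complete(prompt).strip()


backend = EchoBackend("SELECT COUNT(*) FROM customers;")
agent = SQLAgent(backend, "CREATE TABLE customers (id INTEGER PRIMARY KEY);")
print(agent.generate_sql("How many customers do we have?"))
```

With this shape, moving from GPT-4 to Claude or a self-hosted Llama model means writing one new class with a `complete` method.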

Benefits:

- Reduced dependence on analysts

- Improved query accuracy

- Scales from small data teams to enterprise-grade deployments

Use Cases:

- Building internal analytics tools where users ask business questions and get SQL statements back

- Powering early versions of intelligent data products that let users interact with data through conversation

- Letting non-technical users automate recurring queries without writing SQL scripts

Key features:

- SQL code generator

- Self-hosting options

- Schema-aware training

- Embeddable in internal tools.

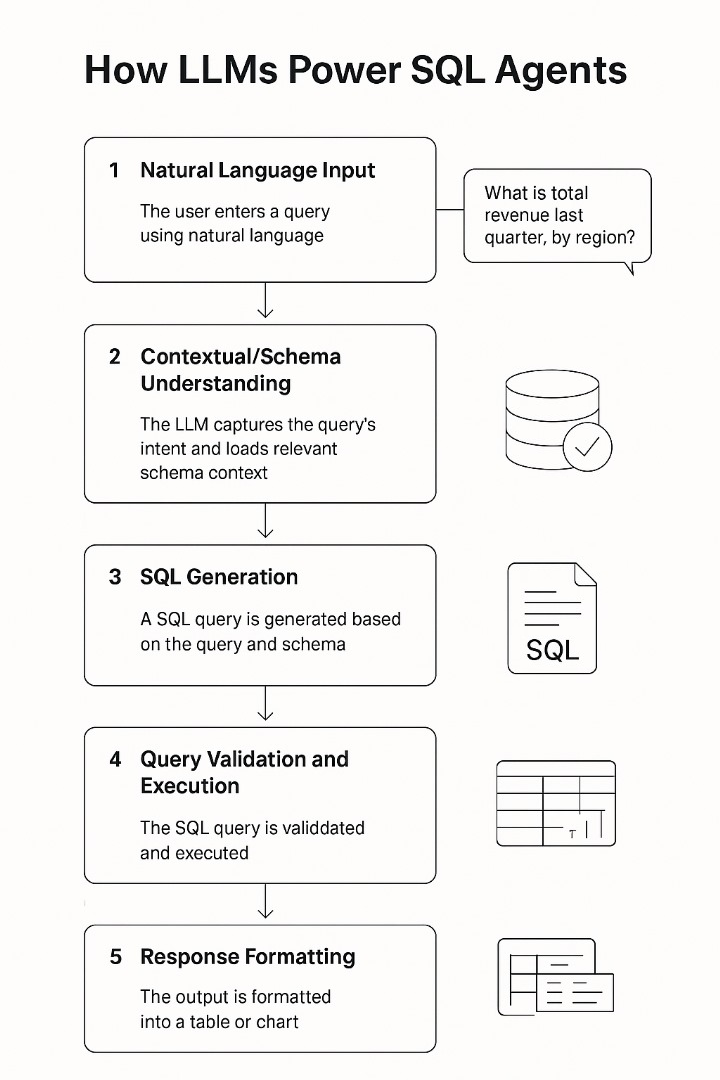

How LLMs Power SQL Agents

Behind every LLM SQL agent is a pipeline that translates natural language into executable SQL queries. Below is a layered explanation of how this process works.

1. Natural Language Input

The process begins with the question you ask in plain English (or another supported natural language), typically entered in a chat box, search bar, or other text input provided by the SQL agent. At this stage, the agent parses the question to identify the user's intent: the metrics, filters, time ranges, and groupings being requested.

For example, the prompt could be, “Show me the total revenue from new customers last quarter, broken down by region.”

2. Contextual/Schema Understanding

Next, the LLM SQL agent maps the question onto the database schema, which includes tables, columns, relationships, and data types. This allows the agent to connect the natural language input to the actual database structure.

A technique often used here is Retrieval-Augmented Generation (RAG). The system first retrieves the relevant parts of your database schema and then passes that context to the LLM along with your question, giving the agent a clearer picture of the data you are referring to.

For example, if the database contains customers, transactions, and regions tables, the SQL agent will use context such as:

- Table relationships

- Column definitions

- Sample queries

This reduces guesswork and helps the agent align its interpretation with how your data is actually structured.
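The retrieval step can be sketched with Python's built-in `sqlite3` module. This is a deliberately simple stand-in for RAG: it scores each table by word overlap between the question and the table's name, columns, and a short description, then places the best-matching `CREATE TABLE` statements in the prompt. Production systems typically use embeddings and richer metadata; the tables, the `TABLE_NOTES` descriptions, and the helper functions here are hypothetical.

```python
from __future__ import annotations

import re
import sqlite3

# Hypothetical business descriptions; in practice these might come from a data catalog.
TABLE_NOTES = {
    "transactions": "revenue sales payments",
    "customers": "new signup",
    "regions": "geography",
}


def words(text: str) -> set[str]:
    """Lowercase tokens with one trailing 's' dropped (a crude singulariser)."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return {t[:-1] if t.endswith("s") else t for t in tokens}


def retrieve_schema(conn: sqlite3.Connection, question: str, top_k: int = 3) -> list[str]:
    """Return CREATE TABLE statements for the tables that best overlap the question."""
    q = words(question)
    scored = []
    tables = conn.execute("SELECT name, sql FROM sqlite_master WHERE type = 'table'").fetchall()
    for name, ddl in tables:
        # Table names come from sqlite_master, not from user input.
        columns = [row[1] for row in conn.execute(f"PRAGMA table_info({name})")]
        vocab = words(" ".join([name, *columns, TABLE_NOTES.get(name, "")]))
        score = len(q & vocab)
        if score:
            scored.append((score, name, ddl))
    scored.sort(key=lambda item: (-item[0], item[1]))  # best match first, ties by name
    return [ddl for _, _, ddl in scored[:top_k]]


def build_prompt(conn: sqlite3.Connection, question: str) -> str:
    """Combine the retrieved schema context with the user's question."""
    context = "\n".join(retrieve_schema(conn, question))
    return f"Schema:\n{context}\n\nQuestion: {question}\nSQL:"


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE regions (id INTEGER PRIMARY KEY, region_name TEXT);
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, is_new BOOLEAN,
                            region_id INTEGER REFERENCES regions(id));
    CREATE TABLE transactions (id INTEGER PRIMARY KEY,
                               customer_id INTEGER REFERENCES customers(id),
                               amount REAL, transaction_date TEXT);
    CREATE TABLE support_tickets (id INTEGER PRIMARY KEY, subject TEXT);
""")
print(build_prompt(conn, "Show me the total revenue from new customers last quarter, broken down by region."))
```

For the example question, the customers, transactions, and regions tables are retrieved while `support_tickets` is left out, which keeps prompts small on large schemas. Note that "revenue" only matches `transactions` because of the hand-written description, which is why business metadata matters.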

3. SQL Generation

Once the agent understands both the user's intent and the data schema, it constructs a syntactically correct SQL query.

This is a sample output for the natural language input above:

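```sql
SELECT r.region_name, SUM(t.amount) AS total_revenue
FROM transactions t
JOIN customers c ON t.customer_id = c.id
JOIN regions r ON c.region_id = r.id
WHERE c.is_new = TRUE
  -- "last quarter" resolved to Q1 2025; the half-open range also counts
  -- timestamps later in the day on 31 March
  AND t.transaction_date >= '2025-01-01'
  AND t.transaction_date < '2025-04-01'
GROUP BY r.region_name;
```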
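One practical way to sanity-check a generated query is to run it against a tiny fixture database whose correct answer is known in advance. The sketch below does this with Python's `sqlite3` module for a version of the query above that uses straight quotes, a half-open date range, and an `ORDER BY` (added only to make the output deterministic). The fixture rows are made up for illustration.

```python
import sqlite3

GENERATED_SQL = """
SELECT r.region_name, SUM(t.amount) AS total_revenue
FROM transactions t
JOIN customers c ON t.customer_id = c.id
JOIN regions r ON c.region_id = r.id
WHERE c.is_new = TRUE
  AND t.transaction_date >= '2025-01-01'
  AND t.transaction_date < '2025-04-01'
GROUP BY r.region_name
ORDER BY r.region_name;
"""


def run_on_fixture(sql: str) -> list:
    """Run a query against a small in-memory database with known (made-up) rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript("""
            CREATE TABLE regions (id INTEGER PRIMARY KEY, region_name TEXT);
            CREATE TABLE customers (id INTEGER PRIMARY KEY, is_new BOOLEAN, region_id INTEGER);
            CREATE TABLE transactions (id INTEGER PRIMARY KEY, customer_id INTEGER,
                                       amount REAL, transaction_date TEXT);
            INSERT INTO regions VALUES (1, 'EMEA'), (2, 'NA');
            INSERT INTO customers VALUES (1, 1, 1), (2, 1, 2), (3, 0, 1);
            INSERT INTO transactions VALUES
                (1, 1, 100.0, '2025-01-15'),
                (2, 1, 50.0, '2025-03-31 18:00:00'),  -- late on the last day of Q1
                (3, 2, 70.0, '2025-02-01'),
                (4, 3, 999.0, '2025-02-01'),          -- existing customer: excluded
                (5, 2, 30.0, '2025-04-01');           -- next quarter: excluded
        """)
        return conn.execute(sql).fetchall()
    finally:
        conn.close()


print(run_on_fixture(GENERATED_SQL))  # [('EMEA', 150.0), ('NA', 70.0)]
```

The 18:00 transaction on 31 March is counted here. With `BETWEEN '2025-01-01' AND '2025-03-31'` it would be dropped whenever `transaction_date` holds timestamps, because the upper bound compares as the very start of 31 March.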

4. Query Validation and Execution

A well-designed LLM SQL agent validates the generated query before executing it against the connected database and presenting the results. Common validation steps include:

- Syntax checking

- Permission checks

- Data type validation
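The three checks above can be sketched for SQLite with Python's standard library. `EXPLAIN` compiles a statement without reading table rows, so it surfaces syntax errors and unknown tables or columns. `Connection.set_authorizer` makes SQLite ask permission for each action while compiling, which here enforces a table allow-list and blocks writes. A regex check confirms that date literals are real calendar dates. `ALLOWED_TABLES` and `validate_sql` are hypothetical names, and other databases need their own equivalents (for example, a read-only database role).

```python
import re
import sqlite3
from datetime import date

# Hypothetical allow-list for the current user's role.
ALLOWED_TABLES = {"customers", "transactions", "regions"}


def validate_sql(conn: sqlite3.Connection, sql: str) -> list:
    """Return a list of problems; an empty list means every check passed."""
    problems = []

    # Data type validation: each 'YYYY-MM-DD' literal must be a real calendar date.
    for literal in re.findall(r"'(\d{4}-\d{2}-\d{2})'", sql):
        try:
            date.fromisoformat(literal)
        except ValueError:
            problems.append(f"invalid date literal: {literal}")

    # Permission check: SQLite consults this callback while compiling the statement.
    def authorizer(action, arg1, arg2, db_name, trigger_or_view):
        if action == sqlite3.SQLITE_READ:  # arg1 is the table being read
            return sqlite3.SQLITE_OK if arg1 in ALLOWED_TABLES else sqlite3.SQLITE_DENY
        if action in (sqlite3.SQLITE_SELECT, sqlite3.SQLITE_FUNCTION):
            return sqlite3.SQLITE_OK
        return sqlite3.SQLITE_DENY  # writes, PRAGMAs, ATTACH and anything else

    # Syntax check: EXPLAIN compiles the query (running the authorizer) without reading rows.
    conn.set_authorizer(authorizer)
    try:
        conn.execute("EXPLAIN " + sql).fetchall()
    except (sqlite3.Error, sqlite3.Warning) as exc:
        problems.append(f"rejected by SQLite: {exc}")
    finally:
        # Restore a permissive authorizer for later queries on this connection.
        conn.set_authorizer(lambda *args: sqlite3.SQLITE_OK)
    return problems


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, is_new BOOLEAN);
    CREATE TABLE salaries (id INTEGER PRIMARY KEY, amount REAL);
""")
print(validate_sql(conn, "SELECT COUNT(*) FROM customers WHERE is_new = 1"))  # []
print(validate_sql(conn, "SELECT * FROM salaries"))  # one 'rejected by SQLite' problem
```

A non-empty list is a signal for the agent to repair the query or ask the user for clarification rather than execute it.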

5. Response Formatting

Finally, the results of the executed query are presented to you (the user) in a user-friendly display. If the SQL agent is integrated with a generative BI (GenBI) tool such as BlazeSQL, the output can include:

- Line or bar charts for trends

- Tables with interactive filters

- Pie charts for proportions
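How an agent picks a visual is product-specific. As one illustration, a simple rule-based chooser might look at the shape of the result set: dates in the first column suggest a line chart, categories suggest a bar chart, and parts of a whole suggest a pie chart. The function names, the six-slice pie limit, and the `share_of_total` flag are hypothetical choices for this sketch.

```python
from __future__ import annotations

from datetime import date
from numbers import Number


def _looks_like_date(value: object) -> bool:
    """True for date objects or strings that start with an ISO date (YYYY-MM-DD)."""
    if isinstance(value, date):
        return True
    if isinstance(value, str):
        try:
            date.fromisoformat(value[:10])
            return True
        except ValueError:
            return False
    return False


def suggest_chart(columns: list[str], rows: list[tuple], share_of_total: bool = False) -> str:
    """Suggest 'line', 'bar', 'pie', or 'table' for a two-column label/value result."""
    if not rows or len(columns) != 2:
        return "table"  # empty or wide results fall back to an interactive table
    label, value = rows[0]
    if not isinstance(value, Number) or isinstance(value, bool):
        return "table"  # the second column must be numeric to plot
    if _looks_like_date(label):
        return "line"  # trends over time
    if share_of_total and len(rows) <= 6:
        return "pie"  # proportions with few enough slices to read
    return "bar"  # comparisons across categories


print(suggest_chart(["region_name", "total_revenue"], [("EMEA", 150.0), ("NA", 70.0)]))  # bar
```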

Common LLMs on Which SQL Agents Are Built

Every LLM SQL agent is built on an underlying large language model. Here is a brief overview of the strengths, limitations, and ideal use cases of some prominent ones:

Claude 3.5 & 3.7 Sonnet (Anthropic):

- Strengths: Strong contextual reasoning and safety alignment; good at understanding schema relationships, handling multi-turn queries, and explaining results in natural language.

- Limitations: Requires prompt engineering or fine-tuning to accurately match domain-specific SQL patterns. Can be less focused on code generation compared to models trained for it.

- Ideal use cases: Scenarios requiring a high degree of natural language understanding for ambiguous or complex data questions, especially for enterprise data teams.

Gemini 1.5 Pro (Google):

- Strengths: Great at integrating real-time data insights. Performs well in semantic search and multi-modal scenarios. Has a huge context window that allows it to process and understand very large amounts of information.

- Limitations: Can underperform GPT-4 and Claude on complex, nested SQL generation in some real-world scenarios.

- Ideal use cases: Integrating SQL generation within existing Google Cloud Platform (GCP) environments, working with large or complex database schemas, and cases where real-time data intelligence is a priority.

GPT-4 (OpenAI):

- Strengths: Highly capable across a wide range of tasks, including SQL code generation. It's popular, versatile, and widely used in production LLM SQL agents, with a large ecosystem of tools and integrations.

- Limitations: More expensive to run than many other models. Without schema injection or RAG techniques, it may lack the context needed to generate correct queries for your data.

- Ideal use cases: Great for general-purpose LLM SQL agents where strong performance and reliability are essential.

Llama 3 70B / 8B (Meta):

- Strengths: Their openly available weights make them more transparent and flexible. The 70B model has strong reasoning capabilities, while the 8B model offers a good balance between efficiency and performance.

- Limitations: Often requires substantial fine-tuning to match the SQL generation accuracy of GPT-4 or Claude, which demands more technical expertise.

- Ideal use cases: Excellent for data teams seeking greater control, privacy, and customisation over the underlying model, particularly within regulated industries.

Mistral 7B & Mixtral (Mistral AI):

- Strengths: Lightweight and efficient open-source models. Mixtral’s Mixture-of-Experts architecture enhances reasoning and performance.

- Limitations: Like Llama, they require more technical expertise to deploy and tune.

- Ideal use cases: Excellent for cost-sensitive deployments that require speed. Also great in environments where strong reasoning for SQL generation is vital.

DeepSeek Chat v3:

- Strengths: Trained with a strong emphasis on code and math, and excels at code generation tasks.

- Limitations: Relatively new to the LLM SQL market compared to GPT-4, so it may need additional stability and consistency testing.

- Ideal use cases: Shows great potential for developer-centric environments. It could also be useful for teams looking to experiment with newer models that blend conversational AI with programming logic.

BlazeSQL: Your Ready-Made LLM SQL Agent

Throughout this article, we've explored the power and potential of LLM SQL agents in transforming how data teams interact with data to generate the SQL queries needed to unlock business insights.

If you're looking to move faster with data, without getting stuck writing or reviewing SQL queries, BlazeSQL provides your team with exactly what it needs: a robust and reliable LLM SQL agent that helps you generate the SQL queries you need using natural language.

It is purpose-built with a strong foundation in accuracy, security, and production readiness for data teams, AI product managers, and BI leaders who want lightning-fast insights.

Ready for fewer bottlenecks and more intelligence?

Frequently Asked Questions

Are LLM SQL agents safe to use with sensitive data? Yes, most enterprise-grade LLM SQL agents include robust security features such as role-based access control, data encryption, and audit logging. These measures ensure that sensitive data is protected and only accessible to authorised users. However, always review your provider’s compliance and security policies before integration.

What databases can LLM SQL agents connect to? Most LLM SQL agents support a wide range of SQL databases, including traditional on-premises systems like MySQL, PostgreSQL, Microsoft SQL Server, and Oracle. They also connect to cloud-based data warehouses such as Snowflake, BigQuery, and Redshift. Before integration, it's essential to confirm compatibility with your specific database and environment.

What is RAG, and how does it help LLM SQL agents? RAG stands for Retrieval-Augmented Generation. It enhances LLM SQL agents by combining real-time data retrieval with language generation. This means the agent can dynamically access up-to-date database schema or external documents, improving the accuracy and relevance of generated SQL queries.

Are LLM SQL agents good enough for complex analytics? LLM SQL agents excel at simplifying many analytical tasks and speeding up query generation, including for moderately complex requests. However, highly complex analytics that require deep domain expertise or heavily customised queries may still need skilled data professionals. These agents work best as part of a broader analytics workflow.